Why Traceability Matters in the Generative AI Age

Discover why traceability is the safety net that makes AI usable in professional documentation

As organizations adopt generative AI to help create, retrieve, or transform technical content, the need for traceability has become impossible to ignore. Writers, editors, and content strategists now live in a world where AI can draft a procedure, summarize a release note, or answer a customer’s question using your documentation. That convenience comes with a new responsibility: understanding how the system arrived at its answer.

👉🏼 This is where traceability comes in.

What Traceability Means in Generative AI

Traceability is the ability to follow the path from an AI-generated output back to the ingredients that produced it.

This includes:

Which source documents the system used

Which model or model version generated the response

What prompts or instructions shaped the output

Which agents or intermediate steps contributed

How the content was transformed along the way
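To make the audit trail concrete, here is a minimal Python sketch of what one trace entry might record, assuming a simple in-house schema. The class names (`SourceRef`, `TraceRecord`), the field names, the topic ID, and the model name are all hypothetical; real RAG frameworks and CCMS platforms log this information in their own formats.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class SourceRef:
    """A source topic the system used, pinned to the version it read."""
    topic_id: str
    version: str


@dataclass
class TraceRecord:
    """One audit-trail entry for a single AI-generated answer (hypothetical schema)."""
    output: str
    model: str   # model name and version that produced the output
    prompt: str  # instructions that shaped the output
    sources: list[SourceRef] = field(default_factory=list)
    steps: list[str] = field(default_factory=list)  # agents or transformations, in order

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, so each SourceRef becomes a plain dict
        return json.dumps(asdict(self), indent=2)


record = TraceRecord(
    output="Restart the gateway before applying the patch.",
    model="example-model-v2",
    prompt="Answer using only approved installation topics.",
    sources=[SourceRef(topic_id="install-gateway", version="3.1")],
    steps=["retrieve", "rerank", "generate"],
)
print(record.to_json())
```

Storing an entry like this with every answer is what lets you point to a specific topic and version instead of guessing.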

👉🏼 Think of it as an audit trail for generative AI.

When someone asks “Why did the system say this?” traceability lets you respond with specifics instead of guesses.

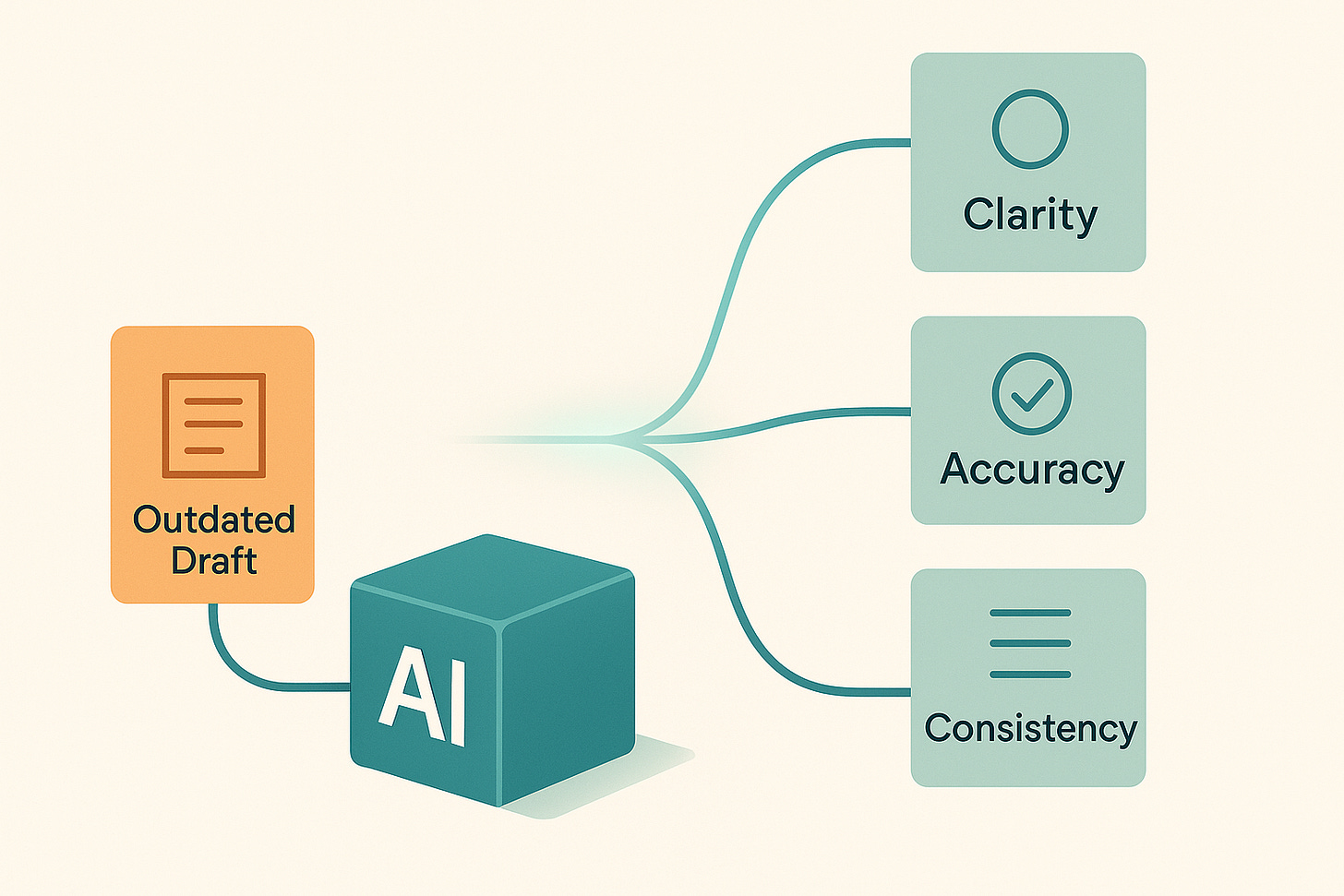

Why Traceability Should Matter to Technical Writers

Technical writers are already responsible for clarity, accuracy, and consistency. Generative AI doesn’t change that responsibility; it amplifies it. When AI enters the workflow, writers must ensure the system uses the right source content and produces outputs that reflect the approved truth.

👉🏼 Traceability makes that possible.

With traceability, you can confirm whether an answer came from a vetted topic or from an outdated draft lurking in a forgotten folder. You can determine whether the system hallucinated a detail or faithfully retrieved information from your component content management system (CCMS). You can see which metadata tags influenced retrieval and whether an agent followed your instructions or drifted off course.

Without traceability, you’re left guessing — not a great position when accuracy is part of your job description.

The Role of Traceability in Governance and Compliance

Many writers work in regulated industries such as medical devices, finance, transit, telecom, cybersecurity, and manufacturing. In these contexts, every published statement must be backed by a controlled source.

As AI becomes part of content production, reviewers, auditors, and regulators will ask legitimate questions:

Was this answer based on approved documentation?

Did the system use any restricted content?

Can you prove the output followed the organization’s processes?

👉🏼 Traceability provides the evidence.

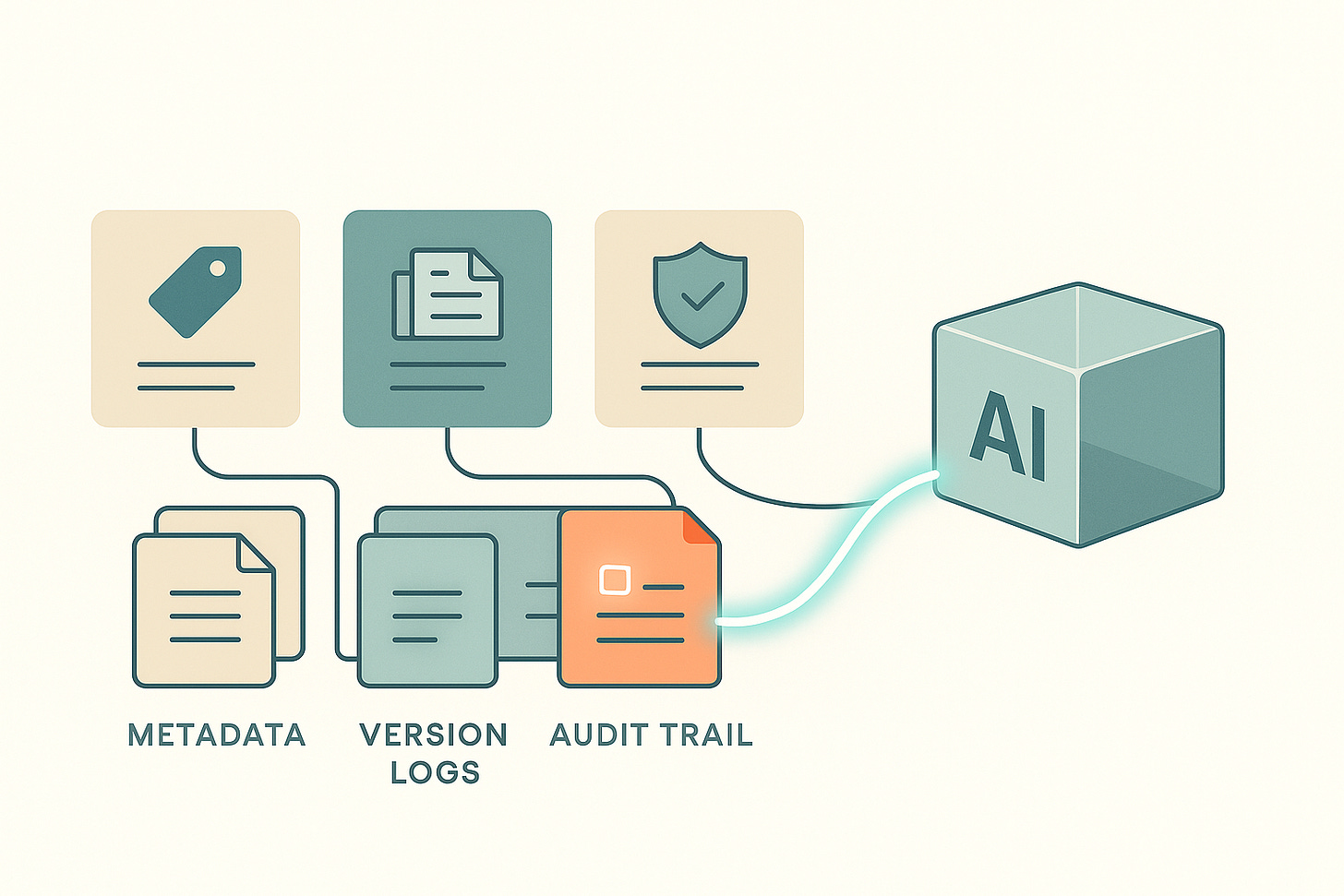

It becomes a natural extension of version control, metadata, review workflows, and other content governance practices writers already use.
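As a rough illustration of how a trace answers the first two reviewer questions, the Python sketch below checks the sources recorded for one answer against their workflow status. It assumes each source carries a `status` value and a `restricted` flag exported from your CCMS; those field names, the `"approved"` value, and the `audit` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Source:
    """A source recorded in a trace (hypothetical fields exported from a CCMS)."""
    topic_id: str
    status: str       # workflow state; "approved" is an assumed value
    restricted: bool  # True if policy forbids using this topic in AI answers


def audit(sources: list[Source]) -> dict[str, list[str]]:
    """Report sources that were not approved and sources that were restricted."""
    return {
        "unapproved": [s.topic_id for s in sources if s.status != "approved"],
        "restricted": [s.topic_id for s in sources if s.restricted],
    }


trace_sources = [
    Source("install-guide", "approved", False),
    Source("install-guide-draft", "draft", False),
    Source("internal-pricing", "approved", True),
]
findings = audit(trace_sources)
print(findings)
# {'unapproved': ['install-guide-draft'], 'restricted': ['internal-pricing']}
```

Two empty lists mean every source behind the answer was approved and unrestricted; anything else is a finding to resolve before the output ships.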

Traceability Helps You Debug AI Systems

When a retrieval-augmented generation (RAG) system or an AI agent produces something incorrect, traceability tells you where the failure occurred.

Maybe the retrieval logic surfaced the wrong topic

Maybe the metadata on a source file was inaccurate

Maybe an AI agent misinterpreted the instruction chain

👉🏼 Traceability turns debugging into a structured process instead of a guessing game.

This matters for writers because you’re often the first person asked to evaluate whether an AI-generated answer is correct — and the first person blamed if it isn’t.

With traceability, you can pinpoint the issue, fix the source, and improve the system.
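One way to picture that structured process is a triage function that walks the trace stage by stage and maps each outcome to one of the three failure points listed above. This Python sketch is hypothetical: it assumes the trace records the retrieved topic IDs and that each topic carries a single `product` metadata tag used as a retrieval filter. `Topic` and `locate_failure` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Topic:
    """A topic in the library, with the metadata tag used as a retrieval filter (assumed)."""
    topic_id: str
    product: str


def locate_failure(expected: Topic, retrieved_ids: list[str], query_product: str) -> str:
    """Triage a wrong answer by checking the trace one pipeline stage at a time."""
    if expected.topic_id not in retrieved_ids:
        if expected.product != query_product:
            # The right topic exists, but its tag kept it out of the filtered search
            return "metadata"
        # Tagging was fine, so the retrieval logic surfaced other topics instead
        return "retrieval"
    # The right source reached the model, so inspect the prompt and agent steps
    return "instructions"


expected = Topic("reset-router", product="router-legacy")  # mis-tagged topic
print(locate_failure(expected, retrieved_ids=["reset-modem"], query_product="router-x1"))
# metadata
```

The order of the checks matters: ruling out retrieval and metadata first keeps you from blaming the model for an answer it never had the right source for.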

Protecting the Integrity of Your Documentation

Writers spend years building controlled vocabularies, maintaining topic libraries, preserving intent, and making sure content stays consistent. Generative AI systems, by design, remix information. That remixing can introduce subtle distortions unless you can see which sources were used and how the system transformed them.

Traceability helps you catch:

Pulls from outdated drafts

Blends of two similar topics

Responses formed from misinterpreted metadata

Answers based on content that never passed review

👉🏼 It gives writers back the visibility they lose when AI systems automate pieces of the workflow.
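Catching outdated pulls, for example, can be as simple as comparing the version a trace recorded against the current version in your topic library. The Python sketch below assumes integer version numbers and a catalog exported as a dictionary; `UsedSource`, `integrity_issues`, and the topic IDs are illustrative names, not part of any specific CCMS.

```python
from dataclasses import dataclass


@dataclass
class UsedSource:
    """A source recorded in a trace (hypothetical schema)."""
    topic_id: str
    version: int  # version number recorded in the trace (assumed to be an integer)


def integrity_issues(used: list[UsedSource], catalog: dict[str, int]) -> list[str]:
    """Compare the versions an answer used against the current versions in the library."""
    issues = []
    for src in used:
        current = catalog.get(src.topic_id)
        if current is None:
            # Not in the managed library at all, e.g. a file from a forgotten folder
            issues.append(f"{src.topic_id}: not in the managed topic library")
        elif src.version < current:
            # The answer drew on an older version than the one currently published
            issues.append(f"{src.topic_id}: used v{src.version}, current is v{current}")
    return issues


catalog = {"install-guide": 4, "release-notes-2.0": 2}
used = [UsedSource("install-guide", 3), UsedSource("old-install-draft", 1)]
for issue in integrity_issues(used, catalog):
    print(issue)
# install-guide: used v3, current is v4
# old-install-draft: not in the managed topic library
```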

Preparing for AI-Driven Content Operations

As CCMS platforms and documentation tools integrate AI, traceability will become part of the content lifecycle.

Future authoring and publishing workflows are likely to include:

Metadata showing which topics influenced an AI-generated suggestion

Logs showing which versions of a topic were used

Audit trails showing how an AI assistant arrived at its edits or summaries

Review tools that highlight AI-originated content for approval

👉🏼 Writers who understand traceability will be positioned to help design these workflows, maintain the content behind them, and ensure trustworthy outputs.

What This Means for Technical Writers

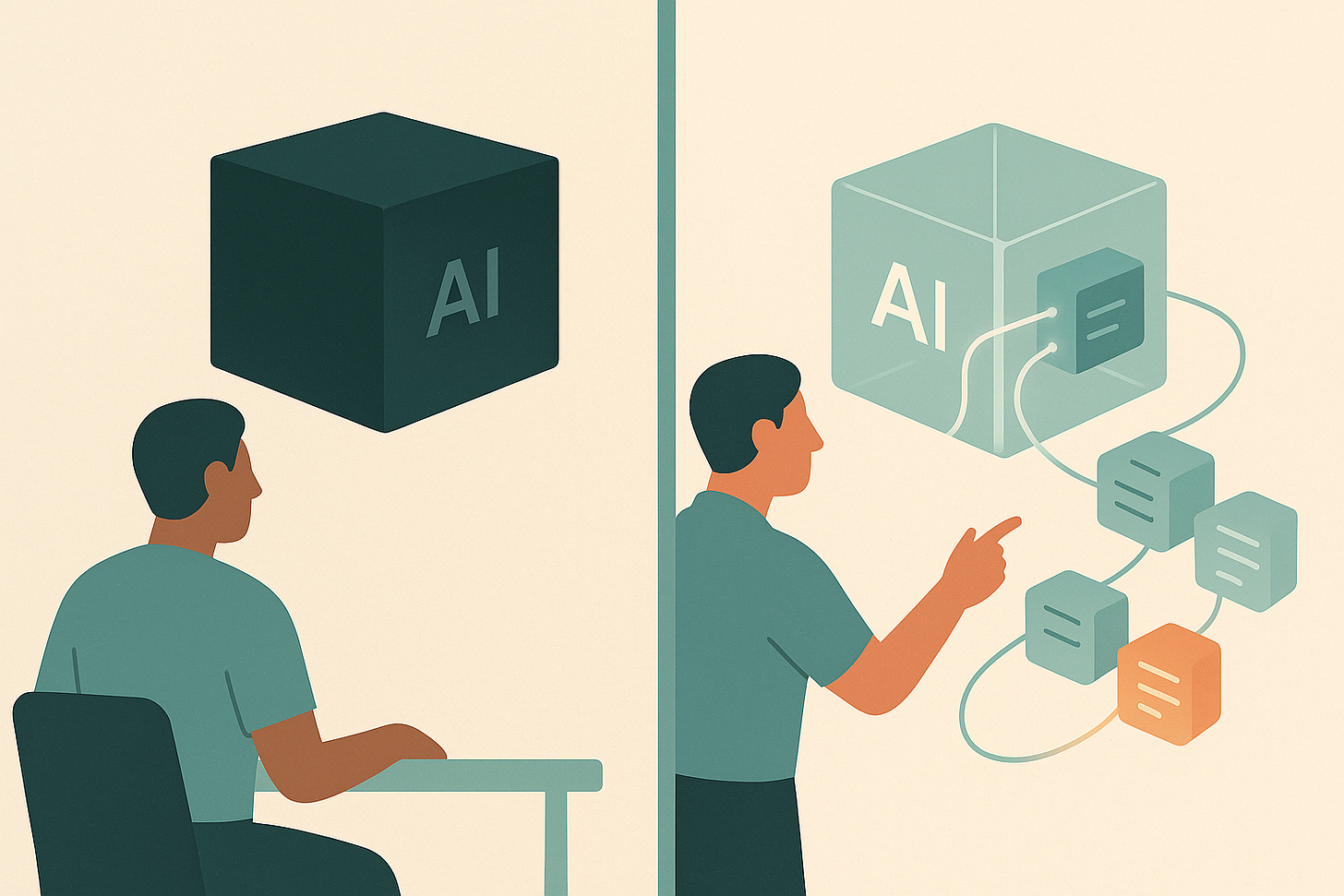

Traceability is the safety layer that makes generative AI viable in professional documentation. It gives technical writers the ability to confirm accuracy, protect content integrity, debug AI behavior, and meet governance expectations.

Without it, AI becomes a black box — impressive, fast, and completely untrustworthy.

With it, AI becomes a powerful extension of your content operations. Writers remain in control. Documentation stays aligned with the truth. Teams can scale with confidence. 🤠