Why Tech Writers Should Care About AI and Critical Thinking

Researchers discovered that using AI made work feel easier — maybe too easy

There’s a new Microsoft Research paper called “The Impact of Generative AI on Critical Thinking.” It basically asks: now that AI can write our drafts, have we forgotten how to think?

The researchers surveyed 319 knowledge workers (the kind of people who use “ideate” unironically) and found that the more confidence people had in AI, the less critical thinking they reported doing.

Confidence in the machine goes up; cognitive effort goes down. Apparently, ChatGPT is like that friend who always offers to “help” and somehow ends up doing the whole group project.

What The Research Uncovered

The researchers discovered that confidence is a funny thing — especially when you hand it over to a machine. The more people believed the AI knew what it was doing, the less they felt compelled to double-check its work. It’s like trusting a toddler with scissors because he looks confident.

Meanwhile, people who actually trusted themselves — their own judgment, experience, and ability to spot nonsense — behaved differently. They stayed skeptical, poked at the AI’s output, and asked, “Really?” instead of “Cool, done.” In other words, they thought like adults in a room full of autocomplete suggestions.

Overall, the study found that using AI made work feel easier — maybe too easy. Tasks that once required mental sweat suddenly felt effortless, like switching from lifting weights to just looking at them. That’s great for short-term productivity but not so great for keeping your cognitive muscles in shape.

Critical thinking hasn’t vanished; it’s simply relocated. We’re no longer wrestling with ideas from scratch — we’re rewriting prompts, squinting at AI responses, and deciding which parts to keep, which to toss, and which to pretend we wrote ourselves. Thinking still happens, but now it shows up wearing a project-manager badge instead of a writer’s cap.

What the researchers found

Confidence in AI = less critical thinking — The more people believed AI could handle a task, the less they bothered to double-check it.

Confidence in yourself = more critical thinking — People who trusted their own brains were more skeptical, analytical, and careful.

AI makes things feel easier (maybe too easy) — Workers said using AI reduced the effort of thinking. That’s efficiency today and brain atrophy tomorrow.

Critical thinking hasn’t disappeared (it’s just moved) — Instead of brainstorming, users spend time refining prompts, inspecting AI output, and deciding what (if anything) to keep.

Why technical writers should care

Let’s be honest: if you’re a technical writer in 2025, you’ve probably spent more time talking to a chatbot than to your manager. Generative AI now writes so fast, so confidently, and with so few bathroom breaks that it’s easy to feel like the intern who’s been politely replaced.

The Microsoft researchers found something both unsurprising and terrifying: the more we trust AI, the less we think.

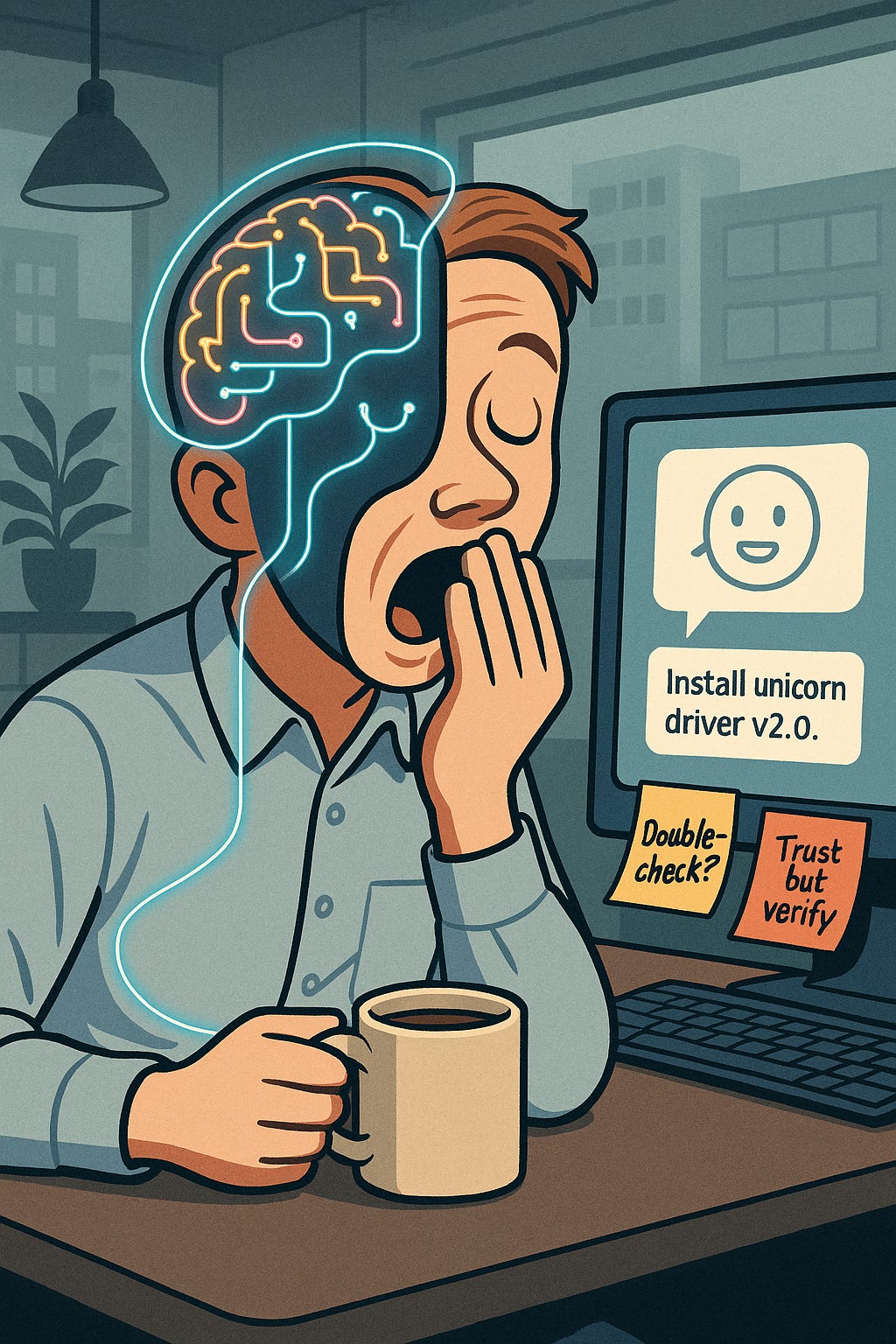

Give it a prompt, get a paragraph, and suddenly you’re nodding like it just delivered gospel truth. “Looks solid,” you say, as the AI confidently misidentifies your product’s operating system and invents an API that doesn’t exist. You hit publish, and two hours later a customer files a ticket asking where to find the “unicorn driver v2.0.”

When you trust the tool too much, your brain quietly clocks out. You go from writer to AI babysitter — someone who scrolls through machine output, red pen in hand, muttering, “Well, that’s not wrong, but it’s not… right, either.” The study calls this a “reduction in cognitive effort.” You might call it “letting the robot do the thinking because you’re tired.”

But there’s a twist. The people who didn’t lose their edge were the ones who trusted themselves more than the machine.

They second-guessed, re-phrased, and applied that deeply human art of suspicion. They read a sentence like “Simply recompile the kernel to enable joy mode” and thought, “Wait — what?” Confidence in your own expertise, it turns out, is the antidote to overconfidence in the algorithm.

AI also makes work feel easier — deliciously, dangerously easier. Why sweat over phrasing when the bot can spit out twenty options before your coffee cools? The problem is that this ease becomes a habit. Like skipping the gym once and then deciding walking to the fridge counts as cardio, we start outsourcing not just the writing, but the thinking about writing. Then one day, you’re responsible for safety documentation, and you realize your critical-thinking muscles have the tensile strength of pudding.

The study says critical thinking hasn’t disappeared; it’s just changed outfits. It used to look like drafting and revising. Now it looks like prompting, reviewing, and integrating — which is corporate for “trying to make the robot sound like it’s met a human before.” We don’t brainstorm anymore; we quality-check hallucinations. It’s a different kind of thinking, but thinking all the same.

Of course, the usual culprits make this harder.

Time pressure.

Unfamiliar topics.

The seductive ease of “good enough.”

You tell yourself you’ll review the AI’s work properly later, right after lunch, right after this meeting, right after this other meeting that could’ve been an email. By the time you get back to it, the content’s already published and your manager is forwarding you screenshots from a user forum titled “Documentation Gone Wild.”

So here’s the takeaway: AI won’t make you stupid, but it’s happy to help if you let it.

Your job isn’t just to write anymore — it’s to stay awake. To be the adult supervision in a room full of eager algorithms. To question everything that looks too smooth, too confident, or too eager to please.

Because if you don’t, you’re not collaborating with AI — you’re proofreading its delusions.

If you ever find yourself thanking AI for “making your job easier,” check whether “easier” really means “I’m no longer thinking.” Microsoft’s study suggests we’re outsourcing our judgment one autocomplete at a time.

So stay curious, second-guess the bot, and keep that critical-thinking muscle flexed. Because when your AI-generated release notes tell users to “sacrifice a goat before rebooting,” someone has to catch that — and it’s probably not going to be Copilot.

Practical Recommendations for Tech Writers

If you’re going to make peace with AI in your writing workflow, you’ll need to learn to manage it like a bright but unreliable intern: eager, fast, and occasionally unhinged. The research makes it clear that using AI well isn’t about pushing a button; it’s about structuring your thinking around the tool.

Begin With A Clear Objective

It starts before you even touch the keyboard. Know what you want. Every solid output begins with a clear objective — not a vague hope that the machine will magically intuit your intent. Write down what you’re actually asking for: a concise installation guide for version 2.1 on Ubuntu 20.04, an FAQ aimed at new DevOps engineers, or a migration checklist for the next release. The clearer you are, the less likely you’ll end up approving something that sounds polished but says nothing.

Interrogate The Output — Trust Is Earned, Not Generated

Once the AI delivers its shiny new draft, treat it the way you’d treat a first-year technical writer’s first attempt — as a starting point, not a finished product. Your job is to interrogate it.

Does it match the right context?

Did it assume Windows when your users live in Linux?

Are those commands even real?

Does the tone sound like your brand or like a robot trying to make friends?

And most importantly — what’s missing?

The AI is good at generating content to fill space; it’s not good at identifying gaps. That’s your territory.

Your domain expertise is your best defense here. You know what’s accurate, what’s risky, and what’s likely to make your support team groan. Don’t let the AI’s confidence trick you into lowering your standards. When you trust your own knowledge, you naturally engage in more critical thinking, and in the study that self-confidence is exactly what went hand in hand with closer scrutiny of AI output.
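If “are those commands even real?” is a question you ask a lot, part of the answer can be automated. Here is a minimal sketch in Python, assuming your drafts mark shell commands with a leading `$ ` (that convention, and the function names `extract_commands` and `find_unknown_commands`, are made up for this example). It pulls command names out of a draft and flags any that aren’t installed on the machine running the check.

```python
import re
import shutil
from typing import Callable

# Matches the first word on lines that look like "$ command ...".
# The "$ " prefix is an assumed convention; adjust it to match your drafts.
COMMAND_LINE = re.compile(r"^\s*\$\s+([A-Za-z0-9_.+-]+)", re.MULTILINE)


def extract_commands(draft: str) -> list[str]:
    """Return the unique command names in a draft, in order of appearance."""
    seen: dict[str, None] = {}
    for name in COMMAND_LINE.findall(draft):
        seen.setdefault(name, None)
    return list(seen)


def on_path(name: str) -> bool:
    """True if an executable with this name is found on the local PATH."""
    return shutil.which(name) is not None


def find_unknown_commands(
    draft: str, is_available: Callable[[str], bool] = on_path
) -> list[str]:
    """Return command names from the draft that the lookup cannot find."""
    return [name for name in extract_commands(draft) if not is_available(name)]
```

Calling `find_unknown_commands(draft_text)` returns the commands worth a second look. It only proves a command exists on your machine, not that the flags are right or that your users’ platform has it, so treat an empty list as “keep reading,” not “ship it.”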

Move From Using AI To Training A System That Works For You

It helps to build a lightweight review process specifically for AI-assisted work — a kind of hygiene routine for co-authoring with machines. Start with clear prompts and constraints, review for validity and missing context, decide what to keep or discard, then polish for tone and consistency. After publication, take notes on what worked and what didn’t so your next round is smarter. That’s how you move from “using AI” to “training a system that works for you.”

But don’t forget to write by hand — metaphorically speaking. Every so often, start from a blank page. Draft something without AI’s help. It’s how you keep your cognitive muscles from turning to jelly. Because while efficiency is nice, creative and analytical stamina still matter — especially when the stakes are high.

Pay Attention To Avoid Embarrassing Snafus

Speaking of stakes, not every piece of documentation deserves the same level of trust in AI. A quick internal how-to? Fine. A safety-critical procedure or compliance guide? You’d better be reading every line like it’s your name on the liability form. The same speed that saves you time on easy work can sabotage you on important work if you’re not paying attention.
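One way to keep yourself honest is to write the tiers down. The sketch below is purely illustrative: the two risk levels mirror the examples above, but the `Risk` and `Doc` names and the individual review steps are assumptions for the sake of the example, not anything the study prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"    # e.g. a quick internal how-to
    HIGH = "high"  # e.g. a safety-critical procedure or compliance guide


# Hypothetical review steps per tier; replace them with your team's own.
REVIEW_STEPS = {
    Risk.LOW: [
        "skim for wrong context",
        "spot-check commands",
    ],
    Risk.HIGH: [
        "read every line",
        "verify each command and value against the product",
        "check for missing warnings and prerequisites",
    ],
}


@dataclass
class Doc:
    title: str
    risk: Risk


def required_review(doc: Doc) -> list[str]:
    """Return a copy of the review steps for this document's risk tier."""
    return list(REVIEW_STEPS[doc.risk])
```

The point isn’t the code; it’s that the decision about how hard to look gets made before the deadline shows up, not during it.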

Finally, don’t let your organization fall into the “AI does it all” fantasy. Educate your stakeholders. Explain that while AI can accelerate the writing process, it doesn’t replace judgment, fact-checking, or brand consistency. Show them your workflow — the planning, the review, the integration.

Let them see that what they’re paying for isn’t just words; it’s the thinking behind them.

That’s the real secret: in the age of generative AI, the writers who thrive aren’t the fastest — they’re the ones who remember to think. 🤠