The Real Issue Isn’t AI — It’s Accountability

Sarah O’Keefe argues that organizations are chasing the wrong goal with generative AI

In “AI and Accountability,” Sarah O’Keefe takes aim at how organizations define success with generative AI in content creation. Many businesses describe success as producing “instant free content,” but that is a flawed metric. The real goal is not volume of output; it is content that supports business objectives and that users actually use.

AI Produces Commodity Content

O’Keefe points out that:

Content is already treated as a commodity in many marketing teams

Generative AI excels at producing generic, average content quickly

That’s fine for low-level output, but on its own it doesn’t produce high-quality, accurate, domain-specific content

When the goal is high-value content, simply doing “more” with AI fails

O’Keefe’s argument echoes broader industry cautions: AI is very good at pattern synthesis but struggles with original, accurate, context-aware creation unless it is given structured inputs.

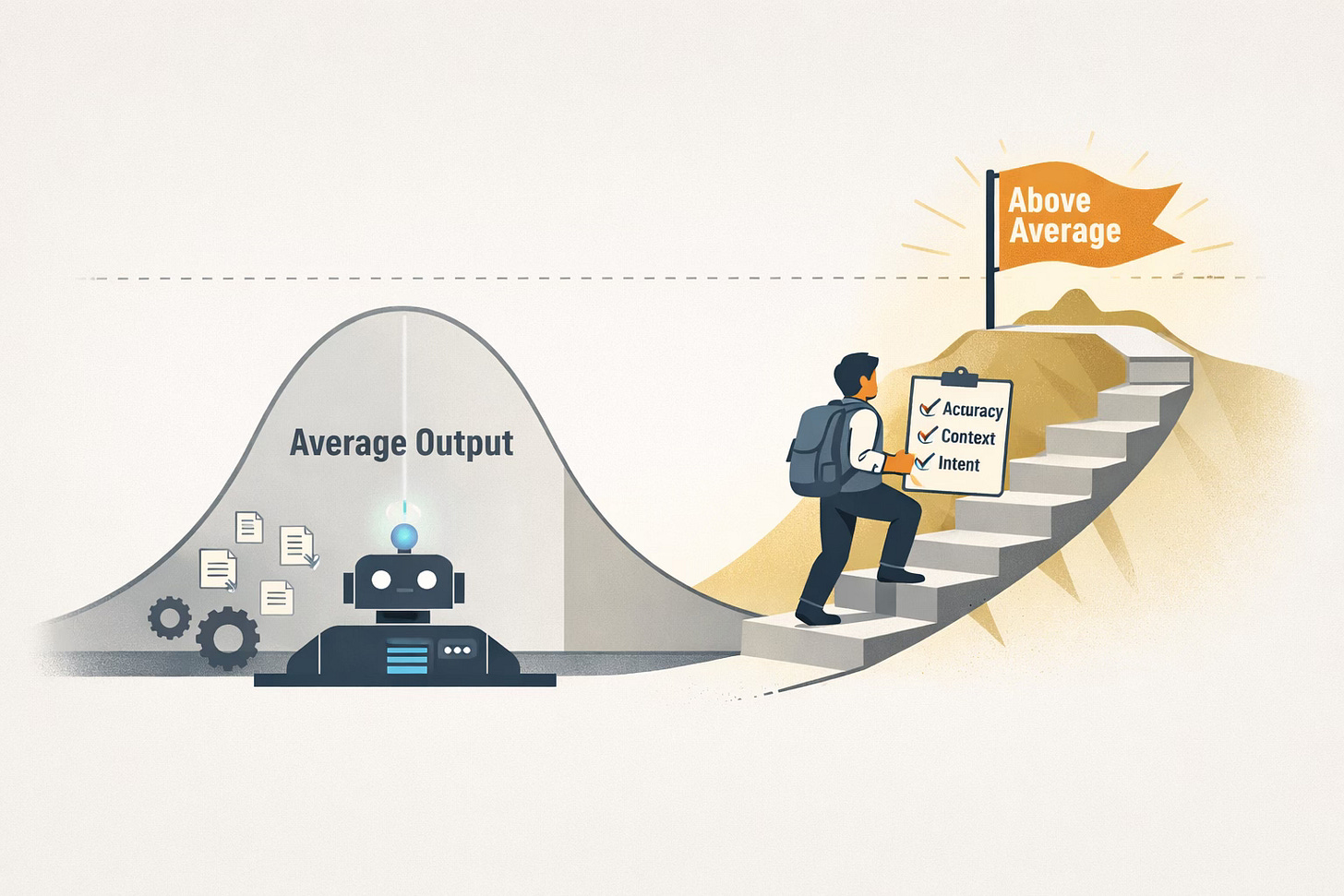

AI Can Raise Average Quality, But It Won’t Raise It Above Average

O’Keefe emphasizes that:

If your current content is below average, AI might improve it

If your content needs to be above average, generative AI does not reliably get you there without expert oversight

This performance gap stems from how AI models generate output: they tend to reproduce the statistical average of what they were trained on

This is an important distinction for tech writers whose docs must be precise, technically correct, and trustworthy.

Accountability Doesn’t Go Away With AI

A central theme is that authors remain accountable for the accuracy, completeness, and trustworthiness of the content they publish, whether or not AI helped generate a draft. O’Keefe gives practical examples:

AI can spin an article but may still generate bogus references

AI won’t justify legal arguments or identify critical edge cases

Errors from AI are still your responsibility if you publish the content

This has real implications for technical documentation where accuracy and liability matter.

The Hype Cycle Isn’t Strategic

O’Keefe warns that tracking “how heavily we’re using AI” internally is a hype-driven metric, not a strategic one. Tech writers and content leaders should reframe the conversation:

From “how much AI are we using?”

To “how efficient and reliable is our content production process?”

And to “how good is the content we deliver for its intended use?”

This shift helps teams build accountability into their workflows instead of chasing novelty.

What This Means for Tech Writers

For tech writers specifically, here are the distilled takeaways:

AI should be a tool — not the goal: Use AI to handle repetitive, well-defined tasks, while keeping humans responsible for correctness and intent

Focus on quality over quantity: Audiences value clarity and accuracy more than volume

Maintain author accountability: Even when AI suggests or drafts content, the author still owns the accuracy of the final product, especially for technical and regulated content

Measure impact, not usage: Shift performance evaluation from AI adoption rates to content effectiveness and user outcomes

👉🏾 Read the article. 🤠